The day before yesterday, 12th Jan 2015, Amazon announced the launch of the C4 instances.

They are the most powerful instances from Amazon to date.

The new C4 instances are based on Intel Xeon E5-2666 v3 processors, code-named Haswell.

Nowadays CPUs have turbo modes and can run faster depending on several factors, such as temperature, power consumption and heat generation (in some cases, if two cores are in use and the rest are idle, those two cores can run faster). That is why cmips stresses all the cores, at 100% of the server's capacity.

The E5-2666 v3 processors run at a base speed of 2.9 GHz and can reach clock speeds of up to 3.5 GHz with Intel® Turbo Boost (complete specifications are available here).

As a result, CMIPS scores can vary a little from one test to another; when the difference is small, we take the higher value.

The turbostat command is provided so that you can manually try to set the speed of the cores.

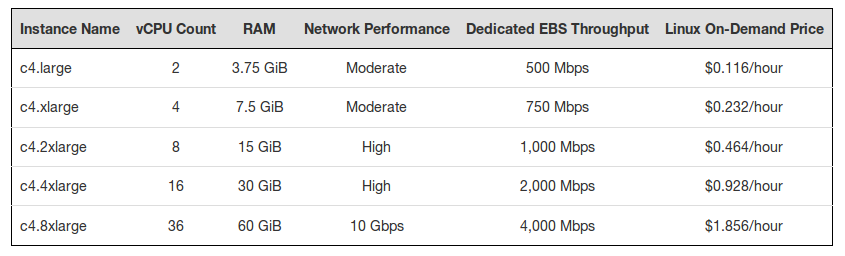

(prices differ slightly in some zones; these are for the US and EU)

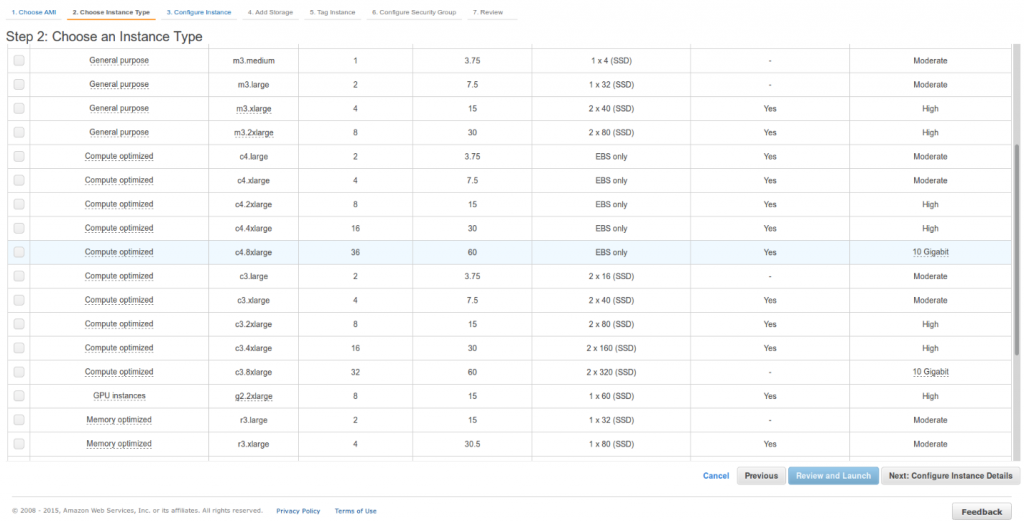

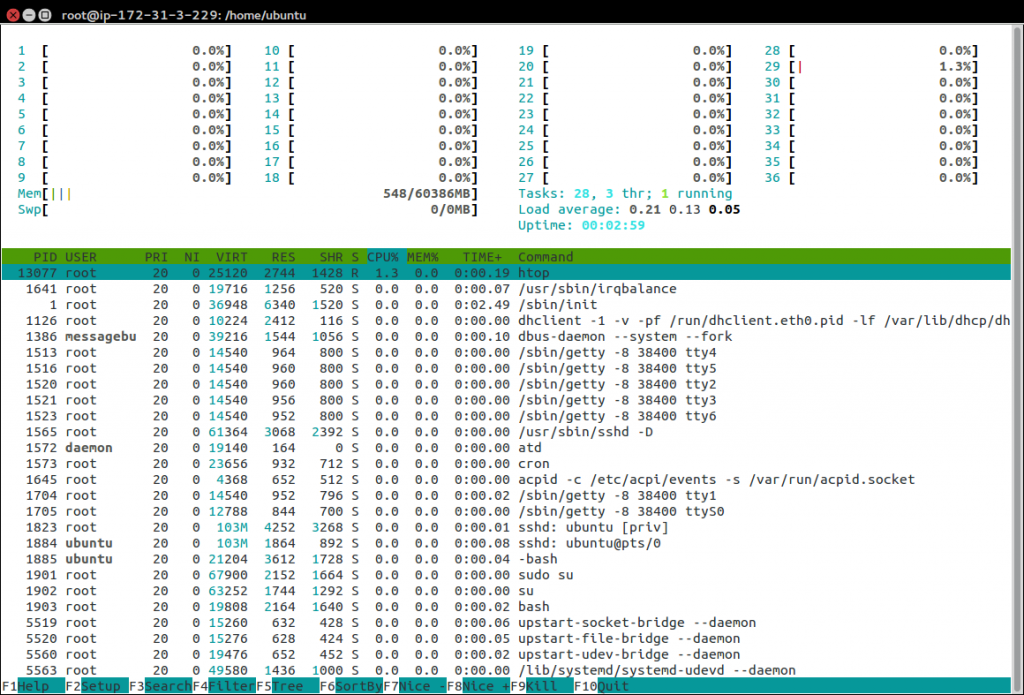

We tested the c4.8xlarge, to see the top of the scale.

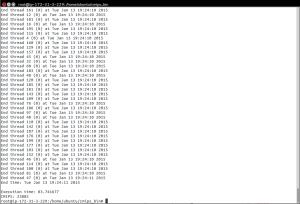

In our tests, the c4.8xlarge achieved 23,882 CMIPS.

That is much higher than the c3.8xlarge (which has 32 vCPUs), which scores 17,476 CMIPS (CPU Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz) at a similar price.
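To put numbers on the gap, the two scores can be compared both overall and per vCPU. This is only a rough sketch: the 36 vCPU figure for the c4.8xlarge is an assumption taken from the instance specification (it is consistent with the cpuinfo listing in this article, whose last processor is number 35), and `cmips_compare` is a made-up helper name, not part of the cmips tool.

```shell
#!/bin/sh
# Compare two CMIPS scores overall and per vCPU.
# cmips_compare is a hypothetical helper, not part of the cmips tool.
# Usage: cmips_compare SCORE_A VCPUS_A SCORE_B VCPUS_B
cmips_compare() {
    awk -v sa="$1" -v va="$2" -v sb="$3" -v vb="$4" 'BEGIN {
        printf "overall: %.1f%% higher\n", (sa / sb - 1) * 100
        printf "per vCPU: %.1f vs %.1f CMIPS\n", sa / va, sb / vb
    }'
}

# c4.8xlarge (23,882 CMIPS, assumed 36 vCPUs) vs c3.8xlarge (17,476 CMIPS, 32 vCPUs)
cmips_compare 23882 36 17476 32
```

With these inputs the c4.8xlarge comes out roughly 37% ahead overall, and part of that lead remains even after dividing by the number of vCPUs.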

The results show that the c4.8xlarge is the most powerful instance we have seen to date and the number one in our rankings, beaten only by CloudSigma's 4 x Intel Xeon E5-4657L v2 multiprocessor dedicated server, which was provided for comparison with full-stack physical servers (48 cores and a score of 28,753 CMIPS).

This is the report from cpuinfo for one of the cores:

    processor       : 35
    vendor_id       : GenuineIntel
    cpu family      : 6
    model           : 63
    model name      : Intel(R) Xeon(R) CPU E5-2666 v3 @ 2.90GHz
    stepping        : 2
    microcode       : 0x25
    cpu MHz         : 1200.000
    cache size      : 25600 KB
    physical id     : 1
    siblings        : 18
    core id         : 8
    cpu cores       : 9
    apicid          : 49
    initial apicid  : 49
    fpu             : yes
    fpu_exception   : yes
    cpuid level     : 13
    wp              : yes
    flags           : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq monitor est ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm ida xsaveopt fsgsbase bmi1 avx2 smep bmi2 erms invpcid
    bogomips        : 5861.99
    clflush size    : 64
    cache_alignment : 64
    address sizes   : 46 bits physical, 48 bits virtual
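The listing can be read as follows: processors are numbered from 0, so processor 35 is the last of 36 logical CPUs; 18 siblings over 9 cpu cores suggests two hardware threads per core; and physical id 1 suggests a second socket. A small sketch that summarises the same fields from any cpuinfo-style file is shown here; `cpu_topology` is a hypothetical helper written for this illustration, and it assumes the x86 field names shown above.

```shell
#!/bin/sh
# Summarise CPU topology from a cpuinfo-style file (default: /proc/cpuinfo).
# cpu_topology is a hypothetical helper written for this illustration.
cpu_topology() {
    awk -F': *' '
        { sub(/[ \t]+$/, "", $1) }      # trim padding after the field name
        $1 == "processor"   { logical++ }
        $1 == "physical id" { sockets[$2] = 1 }
        $1 == "siblings"    { siblings = $2 }
        $1 == "cpu cores"   { cores = $2 }
        END {
            n = 0
            for (id in sockets) n++
            printf "logical=%d sockets=%d threads_per_core=%d\n", logical, n, (cores ? siblings / cores : 0)
        }
    ' "${1:-/proc/cpuinfo}"
}

# Only run against the live system when the file exists (Linux).
if [ -r /proc/cpuinfo ]; then
    cpu_topology
fi
```

On platforms whose cpuinfo lacks the siblings or cpu cores fields, the threads-per-core value is simply reported as 0.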

The tests were run on Ubuntu 14.04 Server 64-bit (vps, hvm virtualization) in the Ireland zone, and the storage type was gp2 (General Purpose SSD).

The instance is equipped with 10 Gbit networking, but I'm really shocked by the storage performance: with our standard test, a dd of a 5 GB file on the default filesystem (/dev/sda1), we got poor results:

dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000

    root@ip-172-31-3-229:/home/ubuntu/cmips_bin# dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 41.404 s, 124 MB/s

    dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 49.091 s, 104 MB/s

    root@ip-172-31-3-229:/home/ubuntu/cmips_bin# rm cmips-speed-test.000; dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 34.3242 s, 149 MB/s
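The rates dd prints can be recomputed from the byte count and elapsed time, which also shows that dd reports decimal megabytes (10^6 bytes). A minimal sketch, where `dd_rate` is a hypothetical helper name used only for this illustration:

```shell
#!/bin/sh
# Recompute the rate dd reports: bytes / seconds, in decimal MB (10^6 bytes).
# dd_rate is a hypothetical helper for this illustration.
dd_rate() {
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%.0f\n", bytes / secs / 1e6 }'
}

# The three runs from the article: 5,120,000,000 bytes each
for secs in 41.404 49.091 34.3242; do
    echo "$secs s -> $(dd_rate 5120000000 "$secs") MB/s"
done
```

The results agree with the 124, 104 and 149 MB/s figures in the dd output.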

As you can see, the throughput when creating a 5 GB file is really disappointing, especially taking into account that we are talking about SSD, that EBS Optimization is enabled by default, and that “this feature provides 500 Mbps to 4,000 Mbps of dedicated throughput to EBS above and beyond the general purpose network throughput provided to the instance”. In other tests, with other Amazon instances, we have achieved 490 MB/s, so I checked the documentation and found that some steps may be necessary to benefit from enhanced network performance.

Also, the maximum throughput published in the documentation matches neither my previous results (which were much higher) nor the information published on Amazon's blog about “[…]500 Mbps to 4,000 Mbps[…]”. While publishing this article I tried one of my running c3.4xlarge instances and got 201 MB/s (/dev/xvda1).
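Because Amazon quotes the dedicated EBS throughput in megabits per second while dd reports megabytes per second, it helps to convert one into the other before comparing. A minimal sketch, assuming 8 bits per byte and decimal units on both sides; `mbps_to_mbs` is a hypothetical helper name:

```shell
#!/bin/sh
# Convert megabits per second to megabytes per second (8 bits per byte).
# mbps_to_mbs is a hypothetical helper for this illustration.
mbps_to_mbs() {
    awk -v mbps="$1" 'BEGIN { printf "%.1f\n", mbps / 8 }'
}

for mbps in 500 4000; do
    echo "$mbps Mbps = $(mbps_to_mbs "$mbps") MB/s"
done
```

So the quoted range corresponds to 62.5 MB/s at the low end and 500 MB/s at the high end, which is the scale the dd figures should be read against.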

As the C4 instances are very new, perhaps Amazon is still adjusting and fine-tuning performance.

I’m asking Amazon about that.

Update:

On 15th January I wrote to Amazon at ec2-c4-feedback [at] amazon.com:

Hi,

I’m Carles Mateo, CTO of several Start ups, and founder of cmips.net.

At cmips we benchmark the performance of the instances of the main Cloud Providers.

I just benchmarked your new c4.8xlarge and published an article http://www.cmips.net/2015/01/

I launched an Ubuntu 14.04 Server 64-bit instance, ran some basic storage performance tests that I always do as standard, and I was very disappointed with the performance and have some doubts.

I did:

dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000

Obtaining:

root@ip-172-31-3-229:/home/

So between, 104 MB/s and 149 MB/s.

(The rm before the dd is for the TRIM SSD issue)

As with Amazon's much smaller machines I've obtained throughput of around 490 MB/s with 5 GB files, my questions are:

1) I got 490 MB/s with other instances, with Ubuntu as well, without EBS Optimisation and without any special tuning.

According to your page http://docs.aws.amazon.com/

1.1) Is that right?. Should I follow the steps to benefit from Enhanced Network on the VPC?

1.2) Does that Enhanced Network benefit to Storage also or is only for general networking (not storage) like Apache, ping, etc…?

2) Your page http://docs.aws.amazon.com/

2.1) Does that mean the limit of the bandwidth, or does it have to be added to the EBS standard throughput?

2.2) The document says that for a c3.4xlarge the maximum is 250 MB/s, but doing a dd on one of those I got 320 MB/s.

5000000+0 records in

5000000+0 records out

5120000000 bytes (5.1 GB) copied, 16.0044 s, 320 MB/s

How is that possible?

3) Your announcement of the c4 instances tells:

“As I noted in my original post, EBS Optimization is enabled by default for all C4 instance sizes. This feature provides 500 Mbps to 4,000 Mbps of dedicated throughput to EBS above and beyond the general purpose network”

3.1) 4,000 Mbps means 500 MB/s above and beyond the general purpose network, but your document http://docs.aws.amazon.com/

3.2) Are network and storage network sharing the same cables and infrastructure or are different ethernets/switches, etc…?

4) Do you implement any type of Storage cache at hypervisor level? And at Storage level?

5) Is dd from /dev/zero with big files a good way to get an idea of the available bandwidth / throughput? Do you recommend other mechanisms for getting an idea of the performance?

Thanks.

Best,

Carles Mateo

On 19th January I got a reply from Amazon:

Hi Carles,

Thanks for your feedback on C4!

Regarding (1), we currently expect that a single EBS GP2 volume can burst up to 128 MBps[1]. I believe the variation in your results is because the command you are using writes data out to the page cache. You are writing out enough data to exhaust the page cache so I believe the numbers you are getting are roughly accurate however for more consistent results, we would recommend using a tool like fio[2].

Indeed, the Instance Storage on some previous generation platforms can achieve higher results today but we announced improvements to EBS which you may be interested in at re:Invent[3].

Most popular AMIs enable Enhanced Networking by default and it does not have an impact on EBS performance.

The EBS Optimized limits are culmulative for all volumes, not for individual volumes.

Unfortunately, we cannot provide details about our underlying storage layer but if you have other questions about C4, I would be happy to answer them.

Thanks again for taking the time to provide feedback!

[1] http://docs.aws.amazon.com/

[2] http://docs.aws.amazon.com/

[3] https://aws.amazon.com/blogs/

Regards,
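Following up on the reply's point about the page cache: one way to make a dd test less sensitive to caching is to have dd flush the data to disk before it reports the rate. The sketch below assumes GNU dd (coreutils), whose `conv=fdatasync` option does this; `write_test` is a hypothetical helper name, and this is not the fio setup Amazon recommends, only a quick variation on the test used in this article.

```shell
#!/bin/sh
# Sequential write test that makes dd flush data to disk before it reports
# the rate, so the page cache inflates the figure less.
# write_test is a hypothetical helper; conv=fdatasync requires GNU dd.
# Usage: write_test FILE SIZE_IN_MIB
write_test() {
    dd if=/dev/zero of="$1" bs=1M count="$2" conv=fdatasync
}

# Small demonstration run (16 MiB); the article's test wrote roughly 5 GB.
demo_file=$(mktemp)
write_test "$demo_file" 16
rm -f "$demo_file"
```

For a run comparable to the ones in this article, something like `write_test cmips-speed-test.000 5000` on the volume under test would write a file of about 5 GB.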
