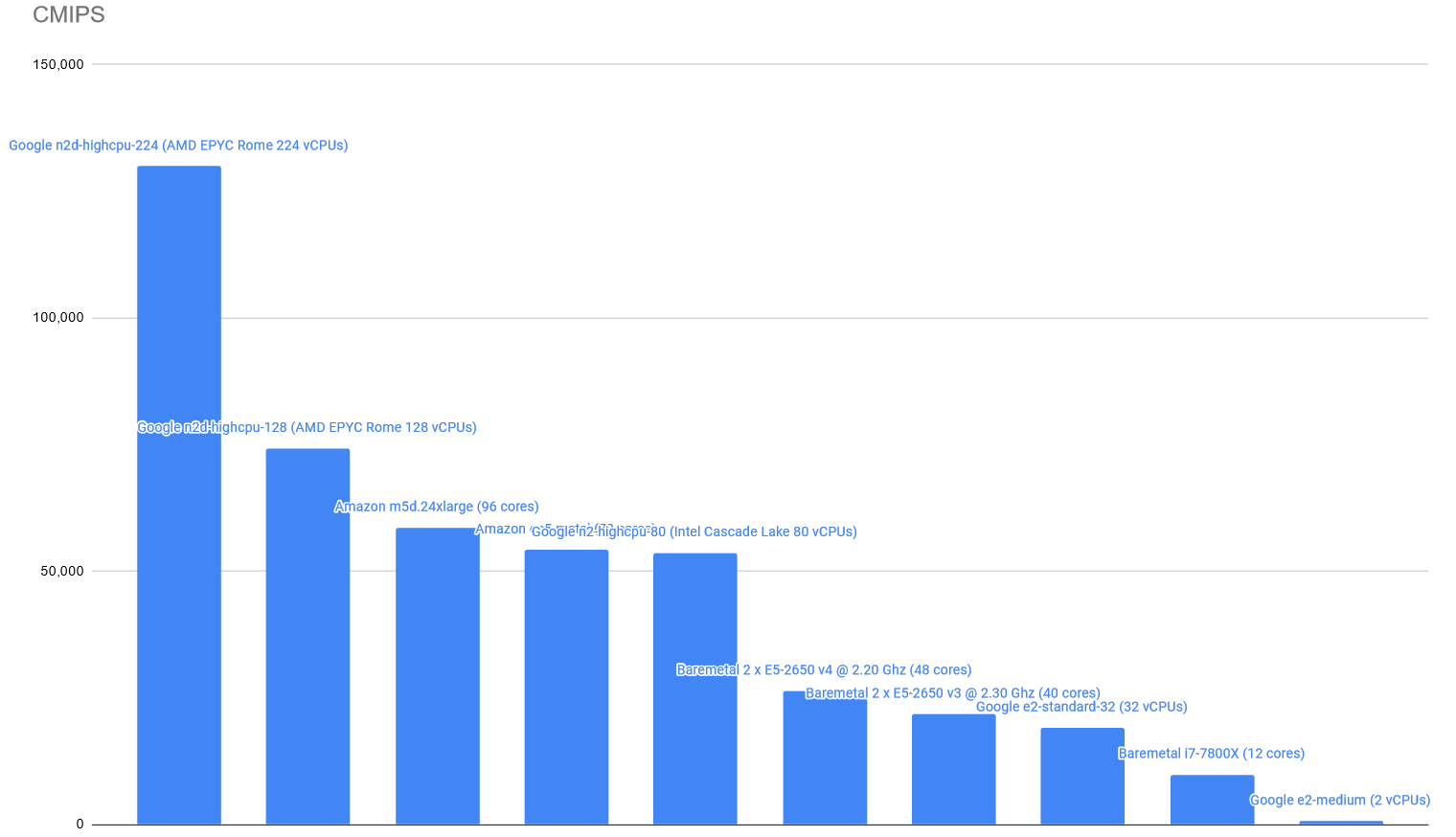

Some of the instances are equipped with the new AMD EPYC Rome CPUs.

Google’s n2d-highcpu-224, for example, comes with 224 virtual CPUs (cores).

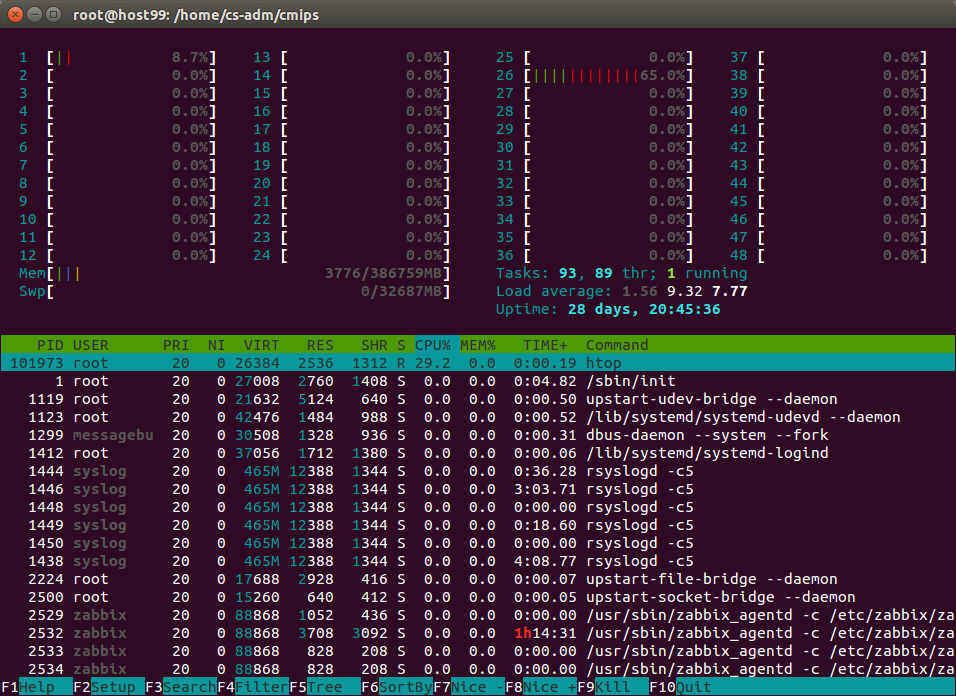

The CMIPS score is:

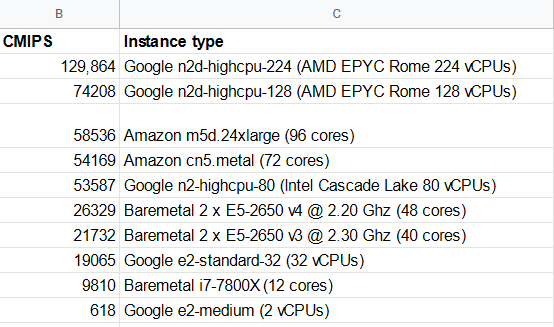

The latest Compute Optimized instances from Amazon AWS, compared with two bare-metal servers with 2 processors each and a single desktop Intel i7-7800X processor.

| CMIPS Score | Execution time (seconds) | Type of instance | Total cores | CPU model |
|---|---|---|---|---|
| 58536 | 34.16 | Amazon AWS m5d.24xlarge | 96 | Intel(R) Xeon(R) Platinum 8175M CPU @ 2.50GHz |
| 54169 | 36.92 | Amazon AWS c5n.metal | 72 | Intel(R) Xeon(R) Platinum 8124M CPU @ 3.00GHz |
| 26329 | 75.96 | Baremetal | 48 | Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz |
| 21732 | 92.02 | Baremetal | 40 | Intel(R) Xeon(R) CPU E5-2650 v3 @ 2.30GHz |
| 9810 | 203.87 | Desktop computer | 12 | Intel(R) Core(TM) i7-7800X CPU @ 3.50 GHz |

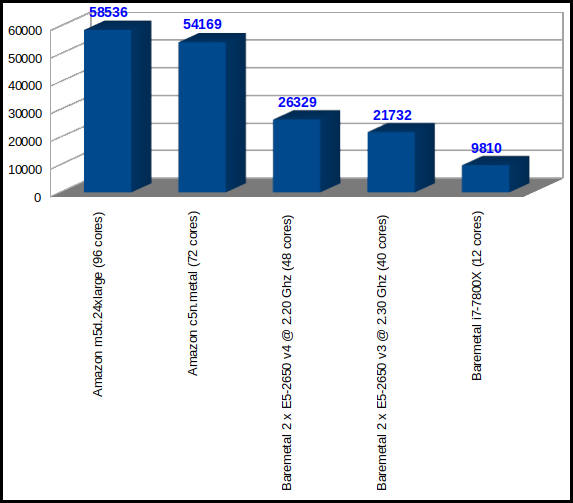

The day before yesterday, 12th Jan 2015, Amazon announced the launch of the C4 instances.

They are the most powerful instances from Amazon to date.

The new C4 instances are based on Intel Xeon E5-2666 v3 processors, code-named Haswell.

Modern CPUs have turbo modes and can run faster depending on several factors, such as temperature, power consumption and heat generation (in some cases, if two cores are in use and the rest are idle, those two cores can run faster). That is why CMIPS stresses all the cores and 100% of the capacity of the server.

Those processors run at a base speed of 2.9 GHz and can reach clock speeds of up to 3.5 GHz with Intel® Turbo Boost (complete specifications are available here).

As a result, CMIPS scores can vary slightly from one test to another; if the difference is small, we take the higher value.

The turbostat command is available to check manually the speeds the cores are actually running at.

(Prices differ slightly in some zones; those shown are for the US and EU.)

We tested the c4.8xlarge, to see the top of the scale.

In our tests, the c4.8xlarge achieved 23,882 CMIPS.

That is considerably higher than the c3.8xlarge (32 vCPUs), which scores 17,476 CMIPS (Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz) at a similar price.
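To put a number on that difference, here is a minimal sketch that computes the relative gain from the two CMIPS scores quoted above. The helper name `percent_gain` is just an illustrative choice, not part of the cmips tooling.

```python
def percent_gain(new_score: float, old_score: float) -> float:
    """Return how much higher new_score is than old_score, in percent."""
    return (new_score / old_score - 1.0) * 100.0


# CMIPS scores quoted in this article.
C4_8XLARGE_CMIPS = 23882
C3_8XLARGE_CMIPS = 17476

gain = percent_gain(C4_8XLARGE_CMIPS, C3_8XLARGE_CMIPS)
print(f"c4.8xlarge scores {gain:.1f}% more CMIPS than c3.8xlarge")
```

With these scores the gain comes out at roughly 37%.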

The results show that the c4.8xlarge is the most powerful instance we have seen to date and number one in our rankings, beaten only by CloudSigma’s dedicated multiprocessor server with 4 x Intel Xeon E5-4657L v2, included for comparison with full physical servers (48 cores and a score of 28,753 CMIPS).

This is the report from cpuinfo for one of the cores:

    processor : 35
    vendor_id : GenuineIntel
    cpu family : 6
    model : 63
    model name : Intel(R) Xeon(R) CPU E5-2666 v3 @ 2.90GHz
    stepping : 2
    microcode : 0x25
    cpu MHz : 1200.000
    cache size : 25600 KB
    physical id : 1
    siblings : 18
    core id : 8
    cpu cores : 9
    apicid : 49
    initial apicid : 49
    fpu : yes
    fpu_exception : yes
    cpuid level : 13
    wp : yes
    flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx rdtscp lm constant_tsc rep_good nopl xtopology nonstop_tsc aperfmperf eagerfpu pni pclmulqdq monitor est ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm ida xsaveopt fsgsbase bmi1 avx2 smep bmi2 erms invpcid
    bogomips : 5861.99
    clflush size : 64
    cache_alignment : 64
    address sizes : 46 bits physical, 48 bits virtual

The tests were run on Ubuntu 14.04 Server 64 bits (vps, hvm virtualization), in the Ireland zone, and the storage type was gp2 (General Purpose SSD).

It is an instance equipped with 10 Gbit networking, but I’m really shocked by the storage performance: with our standard test, a dd of a 5 GB file on the default filesystem (/dev/sda1), we got a poor result:

dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000

    root@ip-172-31-3-229:/home/ubuntu/cmips_bin# dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 41.404 s, 124 MB/s
    dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 49.091 s, 104 MB/s
    root@ip-172-31-3-229:/home/ubuntu/cmips_bin# rm cmips-speed-test.000; dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000
    5000000+0 records in
    5000000+0 records out
    5120000000 bytes (5.1 GB) copied, 34.3242 s, 149 MB/s
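The MB/s figures that dd prints can be re-derived from the byte count and the elapsed time. The following is a small sketch of that arithmetic, assuming dd's decimal megabytes (1 MB = 1,000,000 bytes); the function name is only illustrative.

```python
def dd_throughput_mb_s(bytes_copied: int, seconds: float) -> float:
    """Throughput in MB/s, where 1 MB = 1,000,000 bytes (the unit dd prints)."""
    return bytes_copied / seconds / 1_000_000


# 5,000,000 blocks of 1,024 bytes, as in the dd command above.
BYTES_COPIED = 5_000_000 * 1024

# Elapsed times reported by the three dd runs above.
for seconds in (41.404, 49.091, 34.3242):
    rate = dd_throughput_mb_s(BYTES_COPIED, seconds)
    print(f"{seconds:>8} s -> {rate:.0f} MB/s")
```

This reproduces the 124, 104 and 149 MB/s reported by the three runs.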

As you can see, the disk throughput when creating a 5 GB file is really disappointing, especially taking into account that we are talking about SSD, that EBS Optimization is enabled by default and that “this feature provides 500 Mbps to 4,000 Mbps of dedicated throughput to EBS above and beyond the general purpose network throughput provided to the instance”. In other tests, with other Amazon instances, we have achieved 490 MB/second, so I checked the documentation and found that additional steps may be necessary to benefit from enhanced network performance.

Also, the maximum throughput published in the documentation matches neither my previous results (much higher) nor the information published in Amazon’s blog about “[…]500 Mbps to 4,000 Mbps[…]”. While publishing this article I tried one of my running c3.4xlarge instances and got 201 MB/second (/dev/xvda1).
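Amazon quotes the dedicated EBS throughput in megabits per second, while dd reports megabytes per second, so the two need converting before they can be compared. A minimal sketch, assuming decimal units and 8 bits per byte, and ignoring any protocol overhead:

```python
def mbps_to_mb_per_s(megabits_per_second: float) -> float:
    """Convert megabits per second (Mbps) to megabytes per second (MB/s)."""
    return megabits_per_second / 8.0


# The range quoted in Amazon's announcement.
for mbps in (500, 4000):
    print(f"{mbps} Mbps = {mbps_to_mb_per_s(mbps):g} MB/s")
```

So the advertised range corresponds to 62.5 MB/s to 500 MB/s of dedicated EBS bandwidth.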

As the C4 instances are very new, perhaps Amazon is still adjusting and fine-tuning performance.

I’m asking Amazon about that.

Update:

On 15th January I wrote to Amazon at ec2-c4-feedback [at] amazon.com:

Hi,

I’m Carles Mateo, CTO of several Start ups, and founder of cmips.net.

At cmips we benchmark the performance of the instances of the main Cloud Providers.

I just benchmarked your new c4.8xlarge and published an article http://www.cmips.net/2015/01/

I launched a Ubuntu 14.04 Server 64 bit, I did some basic storage performance tests that I do as standard always, and I got very disappointed about the performance and have some doubts.

I did:

dd if=/dev/zero of=cmips-speed-test.000 bs=1024 count=5000000

Obtaining:

root@ip-172-31-3-229:/home/

So between, 104 MB/s and 149 MB/s.

(The rm before the dd is for the TRIM SSD issue)

As with Amazon’s much more smaller machines I’ve obtained throughput around 490 MB/s with 5 GB files my questions are:

1) I got 490 MB/s with other instances, with Ubuntu as well, without EBS Optimisation and without any special tunning.

According to your page http://docs.aws.amazon.com/

1.1) Is that right?. Should I follow the steps to benefit from Enhanced Network on the VPC?

1.2) Does that Enhanced Network benefit to Storage also or is only for general networking (not storage) like Apache, ping, etc…?

2) Your page http://docs.aws.amazon.com/

2.1) Does that means the limit of the bandwidth or has to be added to the EBS standard throughput?

2.2) The document says that for a c3.4xlarge the maximum is 250 MB/s, but doing a dd on one of those I got 320 MB/s.

5000000+0 records in

5000000+0 records out

5120000000 bytes (5.1 GB) copied, 16.0044 s, 320 MB/s

How is that possible?

3) Your announcement of the c4 instances tells:

“As I noted in my original post, EBS Optimization is enabled by default for all C4 instance sizes. This feature provides 500 Mbps to 4,000 Mbps of dedicated throughput to EBS above and beyond the general purpose network”

3.1) 4,000 Mbps means 500 MB/s above and beyond the general purpose network, but your document http://docs.aws.amazon.com/

3.2) Are network and storage network sharing the same cables and infrastructure or are different ethernets/switches, etc…?

4) Do you implement any type of Storage cache at hypervisor level? And at Storage level?

5) is dd from /dev/zero with big files a good way to get the idea of the available bandwidth / throughput?. Do you recommend other mechanisms for getting the idea of the performance?.

Thanks.

Best,

Carles Mateo

On 19th January I got a reply from Amazon:

Hi Carles,

Thanks for your feedback on C4!

Regarding (1), we currently expect that a single EBS GP2 volume can burst up to 128 MBps[1]. I believe the variation in your results is because the command you are using writes data out to the page cache. You are writing out enough data to exhaust the page cache so I believe the numbers you are getting are roughly accurate however for more consistent results, we would recommend using a tool like fio[2].

Indeed, the Instance Storage on some previous generation platforms can achieve higher results today but we announced improvements to EBS which you may be interested in at re:Invent[3].

Most popular AMIs enable Enhanced Networking by default and it does not have an impact on EBS performance.

The EBS Optimized limits are culmulative for all volumes, not for individual volumes.

Unfortunately, we cannot provide details about our underlying storage layer but if you have other questions about C4, I would be happy to answer them.

Thanks again for taking the time to provide feedback!

[1] http://docs.aws.amazon.com/

[2] http://docs.aws.amazon.com/

[3] https://aws.amazon.com/blogs/

Regards,
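Amazon’s reply points at the page cache as a source of variation and recommends fio for consistent numbers. As a rough illustration of the page cache point only, here is a sketch of a timed sequential write that includes the final flush to disk (`os.fsync`) in the measurement. The function name, block size and file size are hypothetical choices for this example, and it is not a substitute for fio.

```python
import os
import tempfile
import time


def timed_write(path: str, total_bytes: int, block_size: int = 1024 * 1024) -> float:
    """Write total_bytes of zeros to path, fsync, and return MB/s (1 MB = 10^6 bytes)."""
    block = bytes(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        # Ask the OS to push cached pages to the device before stopping the clock.
        os.fsync(f.fileno())
    elapsed = max(time.perf_counter() - start, 1e-9)
    return written / elapsed / 1_000_000


# Small demonstration run (16 MiB) in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "cmips-speed-test.000")
    print(f"{timed_write(target, 16 * 1024 * 1024):.0f} MB/s")
```

For a meaningful figure the file should be much larger than the demo size used here, and written to the volume under test rather than to a temporary directory.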

I want to add some new benchmarks of a dedicated server, to compare with the power of the cloud instances.

Thanks to my friends at CloudSigma, who were kind enough to invite me to their offices, provided me with everything I needed and gave me total access to this powerful server for the tests.

The server has four Intel Xeon CPUs, specifically the new Intel® Xeon® Processor E5-4657L v2 (30M Cache, 2.40 GHz), released in the first quarter of 2014.

This CPU has 12 cores (24 threads), runs from 2.4 GHz up to 2.9 GHz with Turbo, has 30 MB of Intel Smart Cache and a maximum TDP of only 115 W.

So this server has a total of 12 cores x 4 CPUs = 48 cores.

The server was equipped with 384 GB of RAM, SSD disks and fibre cards, and I analyzed more performance aspects, but these are irrelevant for the CPU speed tests presented here.

Across several tests this server achieved the astonishing score of 28,753 CMIPS, with the tests taking 34.7779 seconds, smashing the previous records.

This is the best score achieved so far, and the most powerful dedicated server evaluated to date. Comparing CMIPS scores, it is far more powerful than the Amazon cluster instance c3.8xlarge, the most powerful instance tested to date. And it doesn’t require any special cluster kernel.
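One way to look at these two results is the score per core. The sketch below divides the CMIPS scores by the core counts given in this article. Note the assumption: the dedicated server’s 48 are physical cores, while the 32 of the c3.8xlarge are the cores reported inside the instance (vCPUs), so the comparison is only indicative.

```python
def cmips_per_core(cmips: float, cores: int) -> float:
    """Average CMIPS contributed by each core."""
    return cmips / cores


# (CMIPS score, cores) as given in this article.
SYSTEMS = {
    "CloudSigma dedicated, 4 x E5-4657L v2": (28753, 48),
    "Amazon c3.8xlarge": (17476, 32),
}

for name, (cmips, cores) in SYSTEMS.items():
    print(f"{name}: {cmips_per_core(cmips, cores):.0f} CMIPS per core")
```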

If you have a main master MySQL server or another CPU-hungry service, you would probably want one of these as a dedicated server, with your front-end servers in the cloud as auto-scaling instances connecting to this beast in a hybrid configuration.

Amazon has reduced its prices by up to 40%, effective today, 1st April.

Note: I noticed t1.micro, hi1.4xlarge and cr1.8xlarge prices didn’t change.
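The size of the cut per instance type can be worked out by comparing the USD/hour column of the updated table with the same column in the earlier table further down in this article. A short sketch of that calculation, using only prices that appear in those two tables:

```python
# (old USD/hour, new USD/hour), taken from the pricing tables in this article.
PRICES = {
    "m1.small": (0.06, 0.044),
    "m1.large": (0.24, 0.175),
    "m1.xlarge": (0.48, 0.35),
    "m3.xlarge": (0.45, 0.28),
    "c1.xlarge": (0.58, 0.52),
    "m2.4xlarge": (1.64, 0.98),
    "cc2.8xlarge": (2.4, 2.0),
    "c3.8xlarge": (2.4, 1.68),
}


def reduction_percent(old: float, new: float) -> float:
    """Price reduction from old to new, as a percentage of the old price."""
    return (old - new) / old * 100.0


reductions = {name: reduction_percent(old, new) for name, (old, new) in PRICES.items()}

# Largest reduction first.
for name, pct in sorted(reductions.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<12} {pct:5.1f}%")
```

The largest cut in this set is the m2.4xlarge, at about 40%, which is consistent with the "up to 40%" in the announcement.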

Detailed results, with pricing update. More CMIPS score is better:

| Type of Service | Provider | Name of the product | Codename | Zone | Processor | Ghz Processor | Cores (from htop) | RAM (GB) | Os tested | CMIPS | Execution time (seconds) | USD /hour | USD /month |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cloud | Amazon | T1 Micro | t1.micro | US East | Intel Xeon E5-2650 | 2 | 1 | 0.613 | Ubuntu Server 13.04 64 bits | 49 | 20,036.7 | $0.02 | $14.40 |
| Cloud | Amazon | M1 Small | m1.small | US East | Intel Xeon E5-2650 | 2 | 1 | 1.6 | Ubuntu Server 13.04 64 bits | 203 | 4,909.89 | $0.044 | $31.68 |
| Cloud | GoGrid | Extra Small (512 MB) | Extra Small | US-East-1 | Intel Xeon E5520 | 2.27 | 1 | 0.5 | Ubuntu Server 12.04 64 bits | 441 | 2,265.14 | $0.04 | $18.13 |
| Physical (laptop) |  |  |  |  | Intel SU4100 | 1.4 | 2 | 4 | Ubuntu Desktop 12.04 64 bits | 460 | 2,170.32 |  |  |
| Cloud | CloudSigma | 1 Core / 1 Ghz | 1 Core / 1 Ghz | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 1 | 1 | Ubuntu Server 12.04.3 64 bits | 565 to 440 | 1,800 | $0.04475 | $32.22 |
| Cloud | Amazon | M1 Large | m1.large | US East | Intel Xeon E5-2650 | 2 | 2 | 7.5 | Ubuntu 13.04 64 bits | 817 | 1,223.67 | $0.175 | $126 |
| Cloud | Linode | 1x priority (smallest) | 1x priority | London | Intel Xeon E5-2670 | 2.6 | 8 | 1 | Ubuntu Server 12.04 64 bits | 1,427 | 700.348 | n/a | $20 |
| Cloud | Amazon | M1 Extra Large | m1.xlarge | US East | Intel Xeon E5-2650 | 2 | 4 | 15 | Ubuntu 13.04 64 bits | 1,635 | 606.6 | $0.35 | $252 |
| Cloud | LunaCloud | 8 Core 1.5 Ghz, 512 MB RAM, 10 GB SSD |  | CH |  | 1.5 | 8 | 0.5 | Ubuntu 13.10 64 bits | 1,859 | 537.64 | $0.0817 | $58.87 |
| Cloud | CloudSigma | 3 Core / 1.667 Ghz each / 5 Ghz Total | 3 Core / 1.667 Ghz each / 5 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 3 | 1 | Ubuntu Server 13.10 64 bits | 1928 to 1675 | 518.64 | $0.1875 | $135 |
| Cloud | Amazon | M3 Extra Large | m3.xlarge | US East | Intel Xeon E5-2670 | 2.6 | 4 | 15 | Ubuntu 13.04 64 bits | 2,065 | 484.1 | $0.28 | $201.60 |
| Cloud | Linode | 2x priority | 2x priority | Dallas, Texas, US | Intel Xeon E5-2670 | 2.6 |  | 2 | Ubuntu Server 12.04 64 bits | 2,556 | 391.19 | n/a | $40 |
| Cloud | GoGrid | Extra Large (8GB) | Extra Large | US-East-1 | Intel Xeon E5520 | 2.27 | 8 | 8 | Ubuntu Server 12.04 64 bits | 2,965 | 327.226 | $0.64 | $290 |
| Cloud | Amazon | C1 High CPU Extra Large | c1.xlarge | US East | Intel Xeon E5506 | 2.13 | 8 | 7 | Ubuntu Server 13.04 64 bits | 3,101 | 322.39 | $0.52 | $374.40 |
| Dedicated | OVH | Server EG 24G | EG 24G | France | Intel Xeon W3530 | 2.8 | 8 | 24 | Ubuntu Server 13.04 64 bits | 3,881 | 257.01 | n/a | $99 |
| Cloud | Amazon | M3 2x Extra Large | m3.2xlarge | US East | Intel Xeon E5-2670 v2 | 2.5 | 8 | 30 | Ubuntu Server 13.04 64 bits | 4,156 | 240.597 | $0.56 | $403.2 |
| Cloud | Amazon | M2 High Memory Quadruple Extra Large | m2.4xlarge | US East | Intel Xeon E5-2665 | 2.4 | 8 | 68.4 | Ubuntu Server 13.04 64 bits | 4,281 | 233.545 | $0.98 | $706.60 |
| Cloud | Rackspace | RackSpace First Generation 30 GB RAM – 8 Cores – 1200 GB |  | US | Quad-Core AMD Opteron(tm) Processor 2374 HE | 2.2 | 8 | 30 | Ubuntu Server 12.04 64 bits | 4,539 | 220.89 | $1.98 | $1,425.60 |
| Physical (desktop workstation) |  |  |  |  | Intel Core i7-4770S | 3.1 (to 3.9 with turbo) | 8 | 32 | Ubuntu Desktop 13.04 64 bits | 5,842 | 171.56 |  |  |
| Cloud | Digital Ocean | Digital Ocean 48GB RAM – 16 Cores – 480 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 16 | 48 | Ubuntu Server 13.04 64 bits | 6,172 | 161.996 | $0.705 | $480 |
| Cloud | Amazon | High I/O Quadruple Extra Large | hi1.4xlarge | US East | Intel Xeon E5620 | 2.4 | 16 | 60.5 | Ubuntu Server 13.04 64 bits | 6,263 | 159.65 | $3.1 | $2,232 |
| Cloud | Digital Ocean | Digital Ocean 64GB RAM – 20 Cores – 640 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 20 | 64 | Ubuntu Server 13.04 64 bits | 8,116 | 123.2 | $0.941 | $640 |
| Cloud | Amazon | Compute Optimized C3 4x Large | c3.4xlarge | US East | Intel Xeon E5-2680 v2 | 2.8 | 16 | 30 | Ubuntu Server 13.04 64 bits | 8,865 | 112.8 | $0.84 | $604.80 |
| Cloud | Digital Ocean | Digital Ocean 96GB RAM – 24 Cores – 960 GB SSD |  | New York 2 | QEMU Virtual CPU version 1.0 |  | 24 | 96 | Ubuntu Server 13.04 64 bits | 9,733 | 102.743 | $1.411 | $960 |
| Cloud | GoGrid | XXX Large (24GB) | XXX Large | US-East-1 | Intel Xeon X5650 | 2.67 | 32 | 24 | Ubuntu Server 12.04 64 bits | 10,037 | 99.622 | $1.92 | $870 |
| Cloud | CloudSigma | 24 Core / 52 Ghz Total | 24 Core / 52 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 24 | 1 | Ubuntu Server 13.10 64 bits | 10979 to 8530 | 98 | $0.9975 | $718.20 |
| Cloud | Amazon | Memory Optimized CR1 Cluster 8xlarge | cr1.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 244 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,468 | 60.721 | $3.5 | $2,520 |
| Cloud | Amazon | Compute Optimized CC2 Cluster 8xlarge | cc2.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 60.5 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,608 | 60.21 | $2 | $1,440 |
| Cloud | CloudSigma | 37 Core / 2.16 Ghz each / 80 Ghz Total | 37 Core / 2.16 Ghz each / 80 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 37 | 1 | Ubuntu Server 13.10 64 bits | 17136 to 8539 | 58 | $1.5195 | $1,094.10 |
| Cloud | Amazon | Compute Optimized C3 8xlarge | c3.8xlarge | US East | Intel Xeon E5-2680 v2 | 2.8 | 32 | 60 | Ubuntu Server 13.10 64 bits for HVM instances (Cluster) | 17,476 | 57.21 | $1.68 | $1,209.6 |

The new Amazon c3.8xlarge, based on the Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80GHz and SSD, has been added. It is the most powerful instance tested to date, narrowly beating CloudSigma’s 37 Cores/80 Ghz (although CloudSigma is much cheaper).

The nice thing about the c3.8xlarge compared to the cc2.8xlarge is that the former is not a Cluster instance like the latter, so you can use a standard Linux distribution rather than the special cluster distributions.

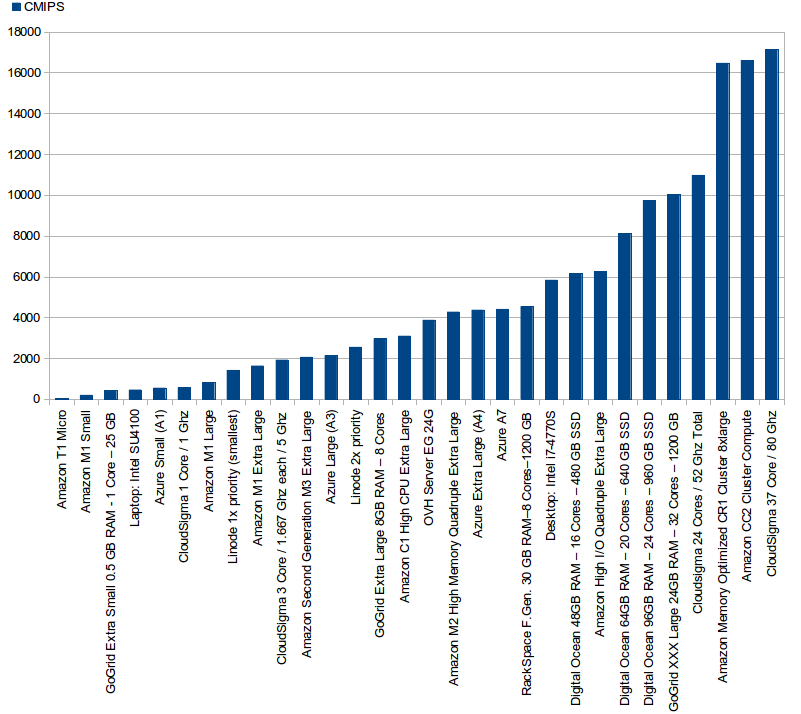

In a graphic (more CMIPS means more CPU+RAM Speed power, so more is better):

Prices for Amazon have been updated; only m3.xlarge (SSD) changed, going from $0.50 USD/hour to $0.45 USD/hour.

http://aws.amazon.com/ec2/pricing/

Prices for Azure have been reviewed but have not changed since the last update in 2013.

http://www.windowsazure.com/en-us/pricing/calculator/

Detailed results:

| Type of Service | Provider | Name of the product | Codename | Zone | Processor | Ghz Processor | Cores (from htop) | RAM (GB) | Os tested | CMIPS | Execution time (seconds) | USD /hour | USD /month |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cloud | Amazon | T1 Micro | t1.micro | US East | Intel Xeon E5-2650 | 2 | 1 | 0.613 | Ubuntu Server 13.04 64 bits | 49 | 20,036.7 | $0.02 | $14.40 |
| Cloud | Amazon | M1 Small | m1.small | US East | Intel Xeon E5-2650 | 2 | 1 | 1.6 | Ubuntu Server 13.04 64 bits | 203 | 4,909.89 | $0.06 | $43.20 |
| Cloud | GoGrid | Extra Small (512 MB) | Extra Small | US-East-1 | Intel Xeon E5520 | 2.27 | 1 | 0.5 | Ubuntu Server 12.04 64 bits | 441 | 2,265.14 | $0.04 | $18.13 |
| Physical (laptop) |  |  |  |  | Intel SU4100 | 1.4 | 2 | 4 | Ubuntu Desktop 12.04 64 bits | 460 | 2,170.32 |  |  |
| Cloud | CloudSigma | 1 Core / 1 Ghz | 1 Core / 1 Ghz | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 1 | 1 | Ubuntu Server 12.04.3 64 bits | 565 to 440 | 1,800 | $0.04475 | $32.22 |
| Cloud | Amazon | M1 Large | m1.large | US East | Intel Xeon E5-2650 | 2 | 2 | 7.5 | Ubuntu 13.04 64 bits | 817 | 1,223.67 | $0.24 | $172.80 |
| Cloud | Linode | 1x priority (smallest) | 1x priority | London | Intel Xeon E5-2670 | 2.6 | 8 | 1 | Ubuntu Server 12.04 64 bits | 1,427 | 700.348 | n/a | $20 |
| Cloud | Amazon | M1 Extra Large | m1.xlarge | US East | Intel Xeon E5-2650 | 2 | 4 | 15 | Ubuntu 13.04 64 bits | 1,635 | 606.6 | $0.48 | $345.60 |
| Cloud | LunaCloud | 8 Core 1.5 Ghz, 512 MB RAM, 10 GB SSD |  | CH |  | 1.5 | 8 | 0.5 | Ubuntu 13.10 64 bits | 1,859 | 537.64 | $0.0817 | $58.87 |
| Cloud | CloudSigma | 3 Core / 1.667 Ghz each / 5 Ghz Total | 3 Core / 1.667 Ghz each / 5 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 3 | 1 | Ubuntu Server 13.10 64 bits | 1928 to 1675 | 518.64 | $0.1875 | $135 |
| Cloud | Amazon | M3 Extra Large | m3.xlarge | US East | Intel Xeon E5-2670 | 2.6 | 4 | 15 | Ubuntu 13.04 64 bits | 2,065 | 484.1 | $0.45 | $324 |
| Cloud | Linode | 2x priority | 2x priority | Dallas, Texas, US | Intel Xeon E5-2670 | 2.6 |  | 2 | Ubuntu Server 12.04 64 bits | 2,556 | 391.19 | n/a | $40 |
| Cloud | GoGrid | Extra Large (8GB) | Extra Large | US-East-1 | Intel Xeon E5520 | 2.27 | 8 | 8 | Ubuntu Server 12.04 64 bits | 2,965 | 327.226 | $0.64 | $290 |
| Cloud | Amazon | C1 High CPU Extra Large | c1.xlarge | US East | Intel Xeon E5506 | 2.13 | 8 | 7 | Ubuntu Server 13.04 64 bits | 3,101 | 322.39 | $0.58 | $417.60 |
| Dedicated | OVH | Server EG 24G | EG 24G | France | Intel Xeon W3530 | 2.8 | 8 | 24 | Ubuntu Server 13.04 64 bits | 3,881 | 257.01 | n/a | $99 |
| Cloud | Amazon | M2 High Memory Quadruple Extra Large | m2.4xlarge | US East | Intel Xeon E5-2665 | 2.4 | 8 | 68.4 | Ubuntu Server 13.04 64 bits | 4,281 | 233.545 | $1.64 | $1,180.80 |
| Cloud | Rackspace | RackSpace First Generation 30 GB RAM – 8 Cores – 1200 GB |  | US | Quad-Core AMD Opteron(tm) Processor 2374 HE | 2.2 | 8 | 30 | Ubuntu Server 12.04 64 bits | 4,539 | 220.89 | $1.98 | $1,425.60 |
| Physical (desktop workstation) |  |  |  |  | Intel Core i7-4770S | 3.1 (to 3.9 with turbo) | 8 | 32 | Ubuntu Desktop 13.04 64 bits | 5,842 | 171.56 |  |  |
| Cloud | Digital Ocean | Digital Ocean 48GB RAM – 16 Cores – 480 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 16 | 48 | Ubuntu Server 13.04 64 bits | 6,172 | 161.996 | $0.705 | $480 |
| Cloud | Amazon | High I/O Quadruple Extra Large | hi1.4xlarge | US East | Intel Xeon E5620 | 2.4 | 16 | 60.5 | Ubuntu Server 13.04 64 bits | 6,263 | 159.65 | $3.1 | $2,232 |
| Cloud | Digital Ocean | Digital Ocean 64GB RAM – 20 Cores – 640 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 20 | 64 | Ubuntu Server 13.04 64 bits | 8,116 | 123.2 | $0.941 | $640 |
| Cloud | Digital Ocean | Digital Ocean 96GB RAM – 24 Cores – 960 GB SSD |  | New York 2 | QEMU Virtual CPU version 1.0 |  | 24 | 96 | Ubuntu Server 13.04 64 bits | 9,733 | 102.743 | $1.411 | $960 |
| Cloud | GoGrid | XXX Large (24GB) | XXX Large | US-East-1 | Intel Xeon X5650 | 2.67 | 32 | 24 | Ubuntu Server 12.04 64 bits | 10,037 | 99.6226 | $1.92 | $870 |
| Cloud | CloudSigma | 24 Core / 52 Ghz Total | 24 Core / 52 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 24 | 1 | Ubuntu Server 13.10 64 bits | 10979 to 8530 | 98 | $0.9975 | $718.20 |
| Cloud | Amazon | Memory Optimized CR1 Cluster 8xlarge | cr1.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 244 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,468 | 60.721 | $3.5 | $2,520 |
| Cloud | Amazon | Compute Optimized CC2 Cluster 8xlarge | cc2.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 60.5 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,608 | 60.21 | $2.4 | $1,728 |
| Cloud | CloudSigma | 37 Core / 2.16 Ghz each / 80 Ghz Total | 37 Core / 2.16 Ghz each / 80 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 37 | 1 | Ubuntu Server 13.10 64 bits | 17136 to 8539 | 58 | $1.5195 | $1,094.10 |
| Cloud | Amazon | Compute Optimized C3 8xlarge | c3.8xlarge | US East | Intel Xeon E5-2680 v2 | 2.8 | 32 | 60 | Ubuntu Server 13.10 64 bits for HVM instances (Cluster) | 17,476 | 57.21 | $2.4 | $1,728 |

LunaCloud is an interesting provider. They don’t have the most powerful servers, but they have some interesting services like Jelastic (for deploying applications in PHP and Java that use MongoDB, CouchDB, PostgreSQL, MySQL, MariaDB or Memcached), cheap load balancers (16 USD/month), and the ability to launch instances very fast and with as little as 512 MB of RAM. That’s cool if you need more CPU and less RAM, as you avoid paying for the extra.

They also let you reserve an amount of bandwidth for the instance, which is pretty interesting.

Like CloudSigma, they let you choose the amount of RAM you want for the instance, and between 1 and 8 cores at 1.5 GHz. That is the most limiting part, as the most powerful server, with 8 cores, scores 1,859 CMIPS, in the low band. On the other hand, the price for an 8-CPU instance with 512 MB of RAM is very interesting.

LunaCloud’s 8 cores at 1.5 GHz (max.) score a bit more than an Amazon M1 Extra Large at a fraction of the cost: 0.0817 USD/hour for the LunaCloud instance versus 0.48 USD/hour for Amazon’s m1.xlarge instance.

LunaCloud has the cheapest price per unit of power for a public instance, beaten only by Linode. But taking into account that Linode charges per day, and so is not useful for scaling up and down, we can say that LunaCloud has the cheapest price per unit of power of the providers reviewed to date (as noted, with CPU maxed and memory minimized to 512 MB).
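The "price per unit of power" ranking can be reproduced from the tables. The sketch below assumes it is simply the monthly price divided by the CMIPS score; the exact formula behind the article’s cost charts is not given here, so treat this as one plausible reading rather than the definition.

```python
# (USD per month, CMIPS), taken from the detailed results table.
OFFERS = {
    "Linode 1x priority": (20.00, 1427),
    "LunaCloud 8 cores / 512 MB": (58.87, 1859),
    "Amazon m1.xlarge": (345.60, 1635),
}


def usd_per_cmips(monthly_usd: float, cmips: float) -> float:
    """Monthly cost of one CMIPS point (assumed definition of price per unit of power)."""
    return monthly_usd / cmips


# Cheapest price per unit of power first.
ranking = sorted(OFFERS, key=lambda name: usd_per_cmips(*OFFERS[name]))

for name in ranking:
    print(f"{name:<28} {usd_per_cmips(*OFFERS[name]):.4f} USD/month per CMIPS")
```

Under that assumption Linode comes first, LunaCloud second, and the Amazon m1.xlarge costs several times more per CMIPS than either.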

They are also the only provider analyzed to date with data centers in Lisbon (Portugal) and Paris (France).

Thanks to LunaCloud for providing 300 € in free credit to do the benchmarks.

We also add one of the physical servers from hetzner.de, specifically the EX-40 SSD (200 GB SSD in RAID 1) with 32 GB RAM, as it has an unbeatable price of 81 USD/month (59 €/month *) for the CPU power and the amount of memory it brings.

* Please note that this model has a one-time setup fee of 59 € that we did not include in the price per hour.
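The hourly and monthly prices in the tables are related by a factor of 720 hours (for example, $0.02/hour x 720 = $14.40/month). Assuming the same 30-day month for the dedicated server, the hourly equivalent of its monthly price, without the setup fee, can be sketched as follows. The function names are illustrative only.

```python
HOURS_PER_MONTH = 30 * 24  # 720, the factor the pricing tables appear to use


def monthly_to_hourly(usd_per_month: float) -> float:
    """Equivalent hourly price for a monthly price, over a 30-day month."""
    return usd_per_month / HOURS_PER_MONTH


def hourly_to_monthly(usd_per_hour: float) -> float:
    """Monthly price for an instance billed hourly and left running all month."""
    return usd_per_hour * HOURS_PER_MONTH


# Hetzner EX-40 SSD at 81 USD/month, one-time setup fee not included.
print(f"{monthly_to_hourly(81):.4f} USD/hour")
```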

It is interesting to see that this Hetzner server, with an Intel Core i7-4770 CPU @ 3.40GHz, scores 6,172 CMIPS, almost 6% more than my workstation equipped with an Intel Core i7-4770S, which scores 5,842 CMIPS.

This dedicated physical server, costing 81 USD/month, scores 6,172 CMIPS, the same score as the Digital Ocean 48GB RAM – 16 Cores – 480 GB SSD costing 480 USD per month, and much more than the RackSpace First Generation 30 GB RAM – 8 Cores – 1200 GB costing 1,425.60 USD/month and scoring 4,539 CMIPS, or the M2 High Memory Quadruple Extra Large with 68.4 GB RAM costing 1,180.80 USD/month and scoring 4,281 CMIPS.

The graphic comparing the CPU performance (CMIPS score):

The graphics of the costs, CUP – Cost of Unit Process:

As you can see, a dedicated server brings a lot of performance at the cheapest price.

Here are the CMIPS results following the recent tests with Microsoft Azure and CloudSigma Cloud.

* Please note that values published in the graph for CloudSigma’s CMIPS are the max obtained, as results fluctuated during the tests, as indicated in the review. The range is below, on the html table.

*2 Costs for CloudSigma were given in EUR, so they have been converted from EUR to USD using Google. Prices shown are for subscription (not burst mode).

And the CUP (Cost per Unit Process), the orange bar.

Detailed results:

| Type of Service | Provider | Name of the product | Codename | Zone | Processor | Ghz Processor | Cores (from htop) | RAM (GB) | Os tested | CMIPS | Execution time (seconds) | USD /hour | USD /month |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cloud | Amazon | T1 Micro | t1.micro | US East | Intel Xeon E5-2650 | 2 | 1 | 0.613 | Ubuntu Server 13.04 64 bits | 49 | 20,036.7 | $0.02 | $14.40 |
| Cloud | Amazon | M1 Small | m1.small | US East | Intel Xeon E5-2650 | 2 | 1 | 1.6 | Ubuntu Server 13.04 64 bits | 203 | 4,909.89 | $0.06 | $43.20 |
| Cloud | GoGrid | Extra Small (512 MB) | Extra Small | US-East-1 | Intel Xeon E5520 | 2.27 | 1 | 0.5 | Ubuntu Server 12.04 64 bits | 441 | 2,265.14 | $0.04 | $18.13 |
| Physical (laptop) |  |  |  |  | Intel SU4100 | 1.4 | 2 | 4 | Ubuntu Desktop 12.04 64 bits | 460 | 2,170.32 |  |  |
| Cloud | CloudSigma | 1 Core / 1 Ghz | 1 Core / 1 Ghz | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 1 | 1 | Ubuntu Server 12.04.3 64 bits | 565 to 440 | 1,800 | $0.04475 | $32.22 |
| Cloud | Amazon | M1 Large | m1.large | US East | Intel Xeon E5-2650 | 2 | 2 | 7.5 | Ubuntu 13.04 64 bits | 817 | 1,223.67 | $0.24 | $172.80 |
| Cloud | Linode | 1x priority (smallest) | 1x priority | London | Intel Xeon E5-2670 | 2.6 | 8 | 1 | Ubuntu Server 12.04 64 bits | 1,427 | 700.348 | n/a | $20 |
| Cloud | Amazon | M1 Extra Large | m1.xlarge | US East | Intel Xeon E5-2650 | 2 | 4 | 15 | Ubuntu 13.04 64 bits | 1,635 | 606.6 | $0.48 | $345.60 |
| Cloud | CloudSigma | 3 Core / 1.667 Ghz each / 5 Ghz Total | 3 Core / 1.667 Ghz each / 5 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 3 | 1 | Ubuntu Server 13.10 64 bits | 1928 to 1675 | 518.64 | $0.1875 | $135 |
| Cloud | Amazon | M3 Extra Large | m3.xlarge | US East | Intel Xeon E5-2670 | 2.6 | 4 | 15 | Ubuntu 13.04 64 bits | 2,065 | 484.1 | $0.50 | $360 |
| Cloud | Linode | 2x priority | 2x priority | Dallas, Texas, US | Intel Xeon E5-2670 | 2.6 |  | 2 | Ubuntu Server 12.04 64 bits | 2,556 | 391.19 | n/a | $40 |
| Cloud | GoGrid | Extra Large (8GB) | Extra Large | US-East-1 | Intel Xeon E5520 | 2.27 | 8 | 8 | Ubuntu Server 12.04 64 bits | 2,965 | 327.226 | $0.64 | $290 |
| Cloud | Amazon | C1 High CPU Extra Large | c1.xlarge | US East | Intel Xeon E5506 | 2.13 | 8 | 7 | Ubuntu Server 13.04 64 bits | 3,101 | 322.39 | $0.58 | $417.60 |
| Dedicated | OVH | Server EG 24G | EG 24G | France | Intel Xeon W3530 | 2.8 | 8 | 24 | Ubuntu Server 13.04 64 bits | 3,881 | 257.01 | n/a | $99 |
| Cloud | Amazon | M2 High Memory Quadruple Extra Large | m2.4xlarge | US East | Intel Xeon E5-2665 | 2.4 | 8 | 68.4 | Ubuntu Server 13.04 64 bits | 4,281 | 233.545 | $1.64 | $1,180.80 |
| Cloud | Rackspace | RackSpace First Generation 30 GB RAM – 8 Cores – 1200 GB |  | US | Quad-Core AMD Opteron(tm) Processor 2374 HE | 2.2 | 8 | 30 | Ubuntu Server 12.04 64 bits | 4,539 | 220.89 | $1.98 | $1,425.60 |
| Physical (desktop workstation) |  |  |  |  | Intel Core i7-4770S | 3.1 (to 3.9 with turbo) | 8 | 32 | Ubuntu Desktop 13.04 64 bits | 5,842 | 171.56 |  |  |
| Cloud | Digital Ocean | Digital Ocean 48GB RAM – 16 Cores – 480 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 16 | 48 | Ubuntu Server 13.04 64 bits | 6,172 | 161.996 | $0.705 | $480 |
| Cloud | Amazon | High I/O Quadruple Extra Large | hi1.4xlarge | US East | Intel Xeon E5620 | 2.4 | 16 | 60.5 | Ubuntu Server 13.04 64 bits | 6,263 | 159.65 | $3.1 | $2,232 |
| Cloud | Digital Ocean | Digital Ocean 64GB RAM – 20 Cores – 640 GB SSD |  | Amsterdam 1 | QEMU Virtual CPU version 1.0 |  | 20 | 64 | Ubuntu Server 13.04 64 bits | 8,116 | 123.2 | $0.941 | $640 |
| Cloud | Digital Ocean | Digital Ocean 96GB RAM – 24 Cores – 960 GB SSD |  | New York 2 | QEMU Virtual CPU version 1.0 |  | 24 | 96 | Ubuntu Server 13.04 64 bits | 9,733 | 102.743 | $1.411 | $960 |
| Cloud | GoGrid | XXX Large (24GB) | XXX Large | US-East-1 | Intel Xeon X5650 | 2.67 | 32 | 24 | Ubuntu Server 12.04 64 bits | 10,037 | 99.6226 | $1.92 | $870 |
| Cloud | CloudSigma | 24 Core / 52 Ghz Total | 24 Core / 52 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 24 | 1 | Ubuntu Server 13.10 64 bits | 10979 to 8530 | 98 | $0.9975 | $718.20 |
| Cloud | Amazon | Memory Optimized CR1 Cluster 8xlarge | cr1.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 244 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,468 | 60.721 | $3.5 | $2,520 |
| Cloud | Amazon | Compute Optimized CC2 Cluster 8xlarge | cc2.8xlarge | US East | Intel Xeon E5-2670 | 2.6 | 32 | 60.5 | Ubuntu Server 13.04 64 bits for HVM instances (Cluster) | 16,608 | 60.21 | $2.4 | $1,728 |
| Cloud | CloudSigma | 37 Core / 2.16 Ghz each / 80 Ghz Total | 37 Core / 2.16 Ghz each / 80 Ghz Total | Zurich (Europe) | Amd Opteron 6380 | 2.5 Ghz to 3.4 Ghz with Turbo | 37 | 1 | Ubuntu Server 13.10 64 bits | 17136 to 8539 | 58 | $1.5195 | $1,094.10 |

CloudSigma has been one of the most difficult providers for me to test to date.

Mainly because they differ in several ways from the average Cloud provider and from the way the vast majority of projects I’ve evaluated work, and because the user interface is a bit ambiguous.

I’ll explain those differences and how to work with CloudSigma.

First of all I want to thank to the Company and to the Customer Support that has been very nice.

CloudSigma assigned one of the support representatives to me, and she has been replying all my in deep questions, they provided 200 € in free credit so I can evaluate their platform at full level, they have provided a lot of technical information on their internals and platform, and have forwarded the bugs I’ve found to their Engineers and kept up me up to date on the development cycle and when they were going to fix the bugs I reported.

I have to clarify that I have found bugs in all the Cloud platforms I’ve tested; in fact, finding bugs is one of the skills my employers love so much. The main difference is that CloudSigma has been the only provider that has told me when they would fix the bugs, with details on the Sprint (agile) and on the schedule for deploying the fixes.

I’ve evaluated their API and reported doubts and missing information in the documentation, and they have been transparent in answering all my questions and in acknowledging the points I reported for improvement. So big congratulations to their wonderful team, and for the good attitude.

For development lovers, I would say that their user interface is new and works with HTML5 WebSockets.

CloudSigma allows you to work with a subscription or in burst mode. In burst mode you add credit to your account in advance and work against it. To start an instance in burst mode you must have enough credit to run the instance for 5 days.
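As a rough illustration of the 5-day rule, here is a minimal sketch of the credit you would need before starting an instance in burst mode. It assumes the requirement is simply hourly price × 24 × 5, and it uses the $1.5195 hourly price listed in the table for the 37-core instance. Whether that figure is the burst rate is an assumption, and `min_burst_credit` is a made-up helper name.

```python
HOURS_PER_DAY = 24


def min_burst_credit(hourly_price, days=5):
    """Credit needed to keep an instance running for `days` days.

    Assumes a flat hourly price; the 5-day default is the rule
    described in the text, the formula itself is an assumption.
    """
    return round(hourly_price * HOURS_PER_DAY * days, 2)


# Hourly price taken from the table row for the 37-core instance.
print(min_burst_credit(1.5195))
```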

One of the differences is that you can create an instance without a disk. This is a bit confusing the first time.

You have to create an instance, then assign a disk.

The disk can be created from an ISO Raw image that you upload, and that’s very good.

It can also be created from a Marketplace image.

You can clone and use pre-installed images from the Marketplace (OpenBSD, RedHat, Slackware, Debian, Ubuntu…), or you can attach installation CDs in ISO format and do a clean install. Ubuntu 13.10 Server was not yet in the list, but I must say that after the publication of this article it was immediately added. Great! You can also use Windows and SQL Server images.

There are also images for the drivers in the Marketplace.

Another positive thing is that the whole variety of Linux Desktops is available. So if you want to install Ubuntu 13.10 Desktop, Debian, or Fedora… you can do it and access it through VNC.

On the other hand, you can move the main or system disk from one server to another, which is pretty cool.

The disks are SSD, and that’s very good for performance. You can create an SSD disk from 1 GB to 3 TB.

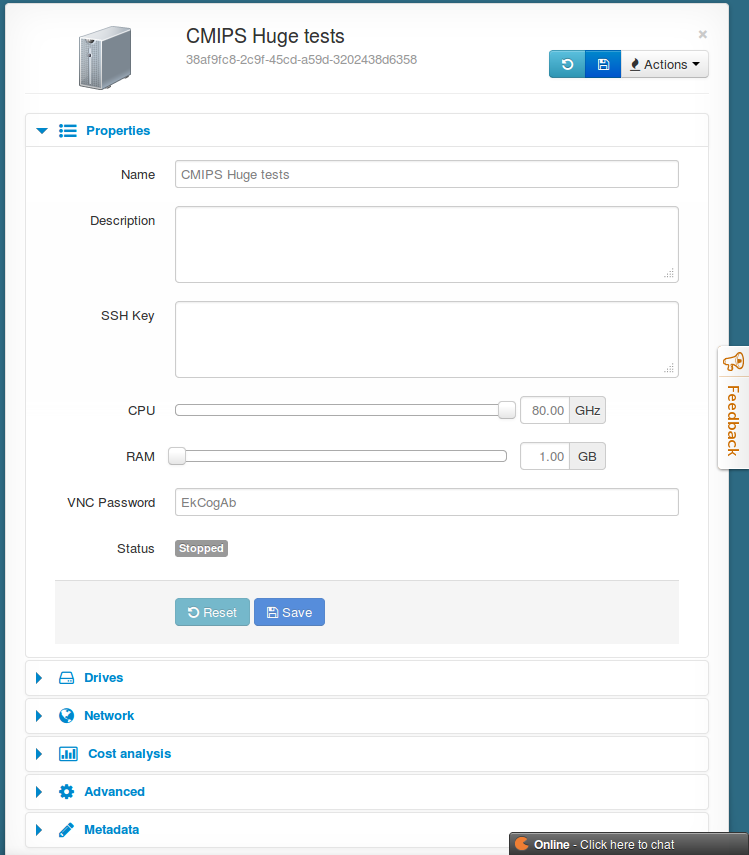

The CloudSigma platform is different from providers that have fixed instance sizes: here you can define how many GHz (processor frequency) and how many GB of RAM you want.

That’s a very interesting feature: if you want a really powerful instance but you don’t need much RAM, you don’t have to pay for it! Likewise, if you want to create a cluster of Cassandra servers with not much CPU but a lot of RAM, you can do it.

You can stop your server and change the amounts later and restart. That easy.

That’s really a very cool provisioning feature.

In terms of Zones, CloudSigma has Datacenters in Zurich (Europe) and Las Vegas (USA). They run double 10GigE lines and offer hybrid private patching (direct cable), a direct connection from your co-location servers to the public cloud. This eliminates the need for a VPN and makes it possible to contract advanced tools for security, backups…

Like me, they believe that hybrid cloud is key.

CloudSigma is a Cloud provider that reminds me a lot of a family company. They have advanced services, but the relationship with customers is exquisite. They care about the companies being happy there as if it were a very small company. Some of my friends’ startups work with CloudSigma and they’re very satisfied.

I found, and they confirmed, that they are running on the latest AMD 6380 processors (16 cores, from 2.5 GHz to 3.4 GHz with Turbo). They can offer up to 80 GHz (80 virtual cores at 1 GHz), but unless I’m missing something I don’t see the maths working (3.4 * 16 = 54.4 GHz).

RAM can range from 1 GB to 128 GB, which is very good.

Another cool feature is that you can balance the number of cores seen by the guest OS and play with the GHz of those cores. So you can have 80 cores at 1 GHz or 37 cores at 2.16 GHz.
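The trade-off between cores and frequency is just a division of the total GHz you buy, which a few lines can make explicit. This is only a sketch of the arithmetic: `per_core_ghz` is a made-up helper, and the configurations are the ones mentioned in the text and in the table. The last part repeats the single-processor check (16 cores at the 3.4 GHz Turbo ceiling). For comparison it also shows a host with two such processors at the 2.5 GHz base clock, which would add up to 80 GHz. That two-processor layout is purely an assumption and not something CloudSigma confirmed.

```python
def per_core_ghz(total_ghz, cores):
    """Frequency each virtual core gets when total_ghz is split evenly."""
    return total_ghz / cores


# (total GHz, cores) combinations mentioned in the text and the table.
for total, cores in [(80, 80), (80, 37), (52, 24)]:
    print(f"{cores} cores sharing {total} GHz -> {per_core_ghz(total, cores):.2f} GHz per core")

# Single-processor check from the text: 16 cores at the 3.4 GHz Turbo ceiling.
single_cpu_peak = 16 * 3.4

# ASSUMPTION, not confirmed: a host with two 16-core processors at base clock.
dual_cpu_base = 2 * 16 * 2.5

print(f"One processor at Turbo: {single_cpu_peak:.1f} GHz")
print(f"Two processors at base clock (assumed layout): {dual_cpu_base:.1f} GHz")
```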

This is a very cool feature.

On the other hand, however, the CPU performance detected by CMIPS is not always the same. It varies a lot from one test to the next.

For a full 80 GHz instance (37 cores at 2.16 GHz each), I got measurements ranging from an astonishing 17136 down to 8539 CMIPS. That is shocking, as I got 10770 to 8530 CMIPS with a 52 GHz (24 virtual cores) instance.

I believe that this is related to AMD power-consumption features, and not to CloudSigma itself, but right now I’m not sure and I would need a lot more testing to be sure.
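To put numbers on that variation, here is a small sketch that computes how far the lowest run falls below the highest one, using only the figures quoted in the text above. The `spread` helper is a made-up name for illustration.

```python
def spread(high, low):
    """Return (absolute drop, drop as a fraction of the high score)."""
    drop = high - low
    return drop, drop / high


# Highest and lowest CMIPS scores quoted in the text for each instance.
runs = {
    "80 GHz, 37 cores": (17136, 8539),
    "52 GHz, 24 cores": (10770, 8530),
}

for label, (high, low) in runs.items():
    drop, fraction = spread(high, low)
    print(f"{label}: {high} -> {low} CMIPS, a drop of {drop} ({fraction:.0%})")
```

In other words, the worst run of the larger instance scores about half of its best run, and lands at practically the same level as the worst run of the smaller one.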

Please note that 17136 is the highest score achieved, even better than the powerful Amazon Compute Optimized CC2 Cluster 8xlarge.

In CloudSigma you can also use all the available bandwidth. Your instances are only limited by the ability of the server to handle network packets, so there is no cap like with other Cloud providers, which limit outbound traffic to 150 Mbits or more depending on the instance size.

Launching instances with CloudSigma is really, really fast. The drive images are cloned at light speed (10 GB in 3 seconds), and the servers are created in seconds and started even faster. Unlike with other Cloud providers, the servers are created stopped.

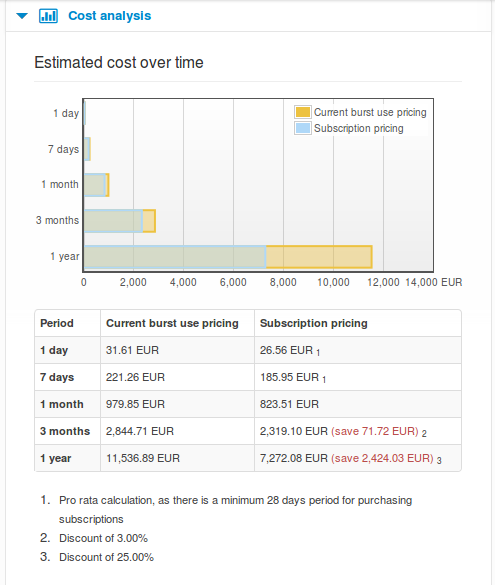

Another cool feature is the built-in cost estimation:

The image shows the price for an 80 GHz instance with 1 GB of RAM.


As you can see, subscription prices are cheaper than burst mode prices.

In fact, CloudSigma has the clearest and most predictable pricing model. You can check everything from the profile:

Using a pre-installed image has the advantage that all the drivers are pre-installed.

Another confusing option is the public key option, which you can define when you create your instance or modify later. As I’ve been using images from the Marketplace, which are user (cloudsigma) and password based, not certificate-based, this does not seem to be a useful option for this scenario and is really confusing.


You can access their API from here: https://zrh.cloudsigma.com/docs/

Something that I dislike about the user interface is that the STOP button has no warning. You press STOP and the instance stops immediately (like a power off), whereas shutdown has a 90-second countdown.

After you have created an instance, assigned the disk, and started it, you have to:

– Edit the instance Properties

– Go to menu Actions

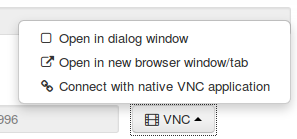

– Select Open VNC

This opens a set of options at the bottom of the same section

– Log in via SSH or via the embedded VNC client (very nice)

The VNC client works well. Unfortunately the keyboard mapping is different, so I am not able to type certain characters like # or | that are generated with AltGr.


This is a problem for the default password of Ubuntu 12.04 Server, which uses a #.

It is especially painful on a laptop.

But you can access via ssh instead, where the keyboard mapping is right.

Then you must change the password for the user cloudsigma. Via ssh it kicks you out afterwards, and you must log in again with the new password.

The network policies (firewall) can be applied to several servers, but if they’re changed, the new ones don’t apply until restart.

Fortunately you can access through the VNC web client (QEMU) even if you messed it up and blocked all the firewall ports.

You can see your bandwidth consumption in real time.